Nowadays, mastering the art of prompt writing has become an essential skill.

Whether you're a translator, interpreter, project manager, localization manager, or any other role in the language industry, the right prompt can save hours of work, spark creativity, and unlock powerful results.

But what makes a good prompt truly effective?

To answer that, we turned to industry experts, seasoned professionals who use AI tools daily to streamline tasks and enhance productivity.

In this article, they share their top tips, techniques, and real-world insights to help you craft better prompts and get more done.

Whether you're just starting or looking to level up, these 10+ expert-backed tips will transform the way you interact with AI.

1. A Trial-And-Error Process

First of all, we should consider prompting as a “trial-and-error process”.

This insight reflects a core reality of working with AI: it’s less like programming and more like having a conversation with an unpredictable but powerful collaborator.

Even well-structured prompts can yield unexpected or incomplete responses, and that’s not necessarily a failure, but part of the process. Over time, you’ll learn how small adjustments can dramatically improve results.

We asked Andrés Romero Arcas, Language Technology Expert, Linguistic Engineer, and Machine Translation and AI Specialist at Acolad Group, for his best tips on crafting a good-quality prompt. Here’s what he suggested:

2. Reviewing the Output Critically

Reviewing the output critically is exactly what Dorota Pawlak, AI Trainer and Localization Consultant, highlighted as one of her top tips for crafting better prompts and boosting productivity.

Drawing from her hands-on experience, she shared a practical set of suggestions that go beyond just writing good prompts.

From being specific and including context to leveraging advanced techniques and customizing tools for repetitive tasks, her advice offers a comprehensive approach to working smarter with AI.

Here’s what she recommends:

This last point highlights how AI can support linguists in far more than just linguistic or translation tasks: it can also streamline project workflows, automate repetitive administrative steps, and even facilitate collaboration across teams. By building custom GPTs tailored to specific needs, linguists can create efficient, reusable tools that handle everything from terminology checks to content structuring, freeing up time for more strategic or creative work.

3. Don’t Limit Yourself to Linguistic Tasks

When crafting a prompt, we should not limit ourselves to linguistic tasks.

Ekaterina Chasnikova, trainer and evaluator of generative AI tools, has emphasized this point repeatedly.

It is no coincidence that she hosts both of our AI courses: the “Certificate in AI for Translators & Interpreters: Prompt Engineering, Tools and Applications”, which combines live activities with hands-on practice with AI tools, and the free course “Basics of AI & AI Prompting for Language Professionals”, which covers foundational concepts in AI and prompting.

Ekaterina consistently encourages going beyond language-specific uses. When asked about her best tips for creating effective prompts, she shared the following:

Mastering prompt creation is a dynamic, iterative process that extends well beyond translation and linguistic tasks, unlocking the full potential of AI to support every step of language professionals’ workflows. Moreover, prompts can also be used to simulate and test end-user comprehension, a step that comes at the end of the translation or localization process.

4. Simulate End-User Comprehension

End-user comprehension, quality control, and translation workflows can all be significantly streamlined through well-crafted AI prompting. Today, translators and linguists alike are increasingly called upon to harness the full potential of large language models (LLMs) by developing precise, task-specific prompts.

By doing so, they can establish clear criteria that not only improve accuracy and consistency but also elevate the overall quality of their work, making their processes more efficient and less error-prone.

To help develop our skills in AI prompting, Almira Zainutdinova, Linguistic Services Lead at Técnicas Reunidas, LLM Trainer, and writer at Meer, shared with us her personal tips for AI prompting with real-life examples.

As you can see, by explicitly specifying target locale conventions, integrating terminology glossaries, and defining tone, style, and register, you provide the LLM with clear, actionable instructions that align its output with your project’s unique requirements.

Combining these precise prompt elements with thorough quality checks, such as side-by-side accuracy reviews, consistency assessments, and end-user comprehension simulations, ensures translations that are not only linguistically accurate but also culturally appropriate and contextually relevant.
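
The prompt elements and checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a tool used by the experts quoted here: the `TranslationBrief` class, the `build_prompt` and `missing_terms` functions, and all sample values are hypothetical names introduced for this example. The glossary check is a naive substring match and would need proper handling of inflected forms in real projects.

```python
from dataclasses import dataclass, field


@dataclass
class TranslationBrief:
    """Hypothetical container for the prompt elements named in the text."""
    source_lang: str
    target_locale: str  # e.g. "es-ES"
    tone: str
    register: str
    glossary: dict[str, str] = field(default_factory=dict)   # source term -> target term
    locale_notes: list[str] = field(default_factory=list)    # locale conventions to follow


def build_prompt(brief: TranslationBrief, source_text: str) -> str:
    """Assemble one plain-text prompt from the brief and the source text."""
    lines = [
        f"Translate the text below from {brief.source_lang} into {brief.target_locale}.",
        f"Tone: {brief.tone}. Register: {brief.register}.",
    ]
    if brief.locale_notes:
        lines.append("Follow these locale conventions:")
        lines += [f"- {note}" for note in brief.locale_notes]
    if brief.glossary:
        lines.append("Use this terminology consistently:")
        lines += [f"- {src} -> {tgt}" for src, tgt in brief.glossary.items()]
    lines += ["", "Text:", source_text]
    return "\n".join(lines)


def missing_terms(brief: TranslationBrief, source_text: str, translation: str) -> list[str]:
    """Return glossary target terms expected in the translation but not found.

    Naive assumption: a case-insensitive substring match is good enough.
    """
    src_lower = source_text.lower()
    out_lower = translation.lower()
    return [
        tgt
        for src, tgt in brief.glossary.items()
        if src.lower() in src_lower and tgt.lower() not in out_lower
    ]


if __name__ == "__main__":
    brief = TranslationBrief(
        source_lang="English",
        target_locale="es-ES",
        tone="friendly",
        register="informal",
        glossary={"invoice": "factura"},
        locale_notes=["Use a comma as the decimal separator."],
    )
    print(build_prompt(brief, "Your invoice is ready."))
```

Keeping the brief separate from the source text means the same locale, glossary, and tone settings can be reused across every segment of a project, while `missing_terms` gives a quick first pass before a human side-by-side review.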

5. Use a Precise and Detailed Template for Better Results

When working with AI prompts, one of the most frequent questions professionals ask is: “Is there a go-to template I can use to get consistently great results?”

The truth is, many users search for a precise and detailed prompt template that can be adapted across projects, saving time and boosting accuracy.

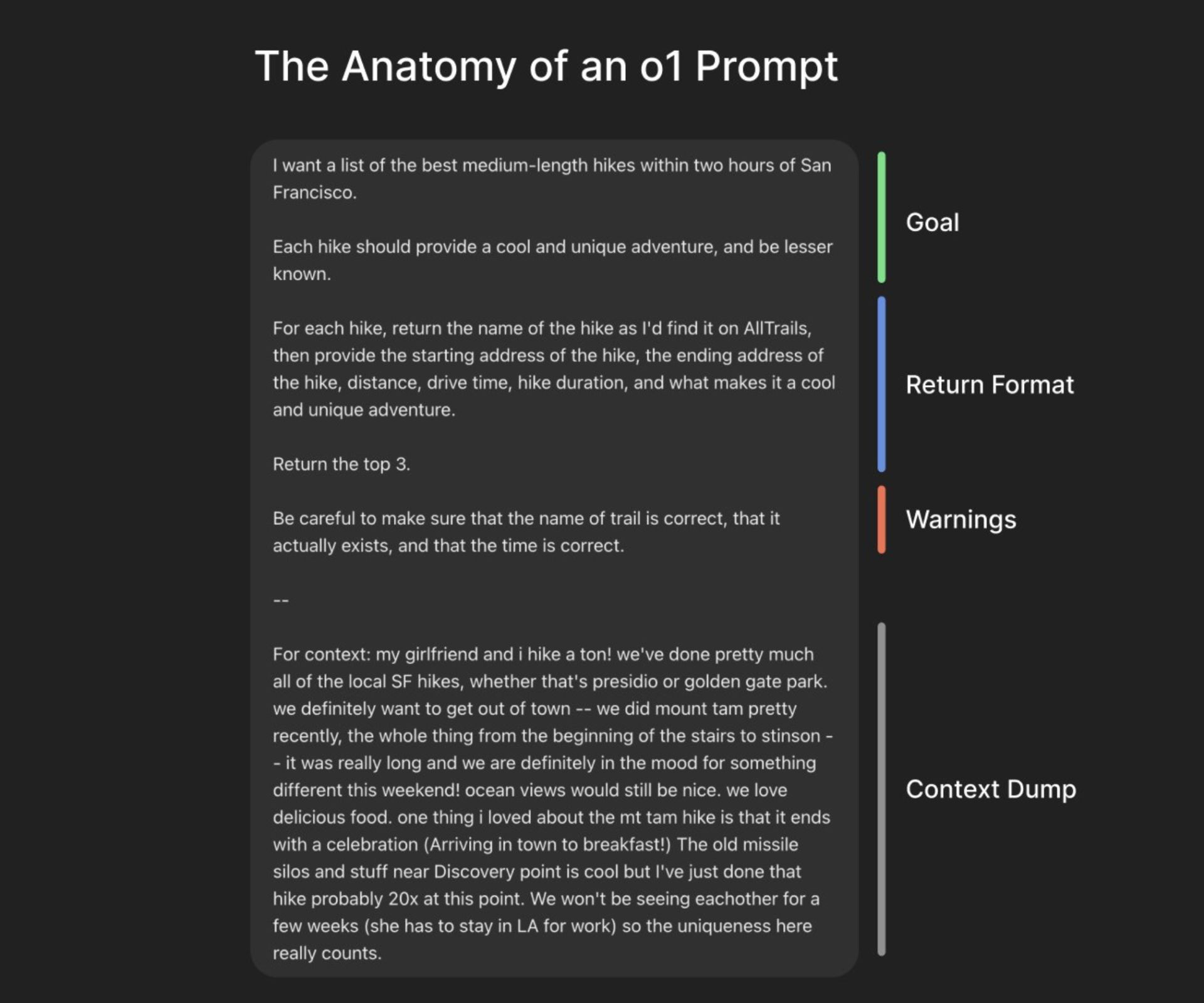

Fortunately, there is one. A clear and effective prompt template was shared by Ben Hylak on Latent Space, in collaboration with swyx and Alessio.

In their article, “o1 isn’t a chat model (and that’s the point)”, we can see how Ben Hylak turned “from o1 pro skeptic to fan by overcoming his skill issue”.

The full article offers valuable context behind the template’s development and practical application.
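
For readers who want to reuse a structured template across projects, here is one way it might be stored and filled in with Python. The four section names (goal, return format, warnings, context) are an assumption made for this sketch and should be checked against the original article. The `render_template` function is hypothetical and is not taken from Latent Space.

```python
def render_template(goal: str, return_format: str, warnings: str, context: str) -> str:
    """Join four labeled sections into one prompt; refuse to render if any is empty."""
    sections = [
        ("Goal", goal),
        ("Return format", return_format),
        ("Warnings", warnings),
        ("Context", context),
    ]
    # Fail early so an incomplete prompt is never sent by accident.
    missing = [name for name, body in sections if not body.strip()]
    if missing:
        raise ValueError(f"Empty section(s): {', '.join(missing)}")
    return "\n\n".join(f"{name}:\n{body.strip()}" for name, body in sections)


if __name__ == "__main__":
    print(
        render_template(
            goal="Review this Italian translation of a user manual for accuracy.",
            return_format="A numbered list of issues, each with a suggested fix.",
            warnings="Do not change product names or measurement units.",
            context="The audience is non-technical home users in Italy.",
        )
    )
```

Rejecting empty sections is a deliberate choice: it forces the writer to state the context and constraints every time, which is where short ad hoc prompts tend to fall down.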

Conclusion

Crafting effective prompts isn’t just a technical skill; it’s a creative, iterative process that blends clarity, strategy, and critical thinking.

As we've seen through expert insights and practical examples, strong prompting goes far beyond basic instructions. It requires understanding the task deeply, providing the right context, and continuously refining based on the AI’s responses.

In addition to the courses mentioned above, consider enrolling in our Master in AI and Innovation for Localization, where you’ll gain a thorough understanding of AI and its applications in translation, content creation, audiovisual projects, project and localization management, and much more.
